How Congress can make the most of Schumer’s AI Insight Forums

Calls for Congress to do something about artificial intelligence have reached a fever pitch in Washington. Today, technology industry bosses, labor leaders and other luminaries will gather for the first of several so-called “AI Insight Forums” convened by Senate Majority Leader Charles E. Schumer, D-N.Y., to try to provide some answers.

Here are four modest suggestions for improving the odds that this political process leads to meaningful progress.

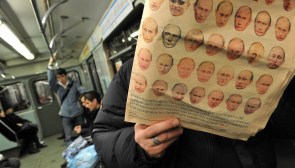

First, get the right people in the room. Schumer has invited a who’s who of technology bigwigs, including Elon Musk, Bill Gates and the CEOs of OpenAI, Microsoft, Meta, IBM and Alphabet, to attend the first of his closed-door meetings. Summoning the tech sector’s top brass to Washington is good political theater, but it is not enough to deliver effective policy. As Meredith Whittaker, an AI expert and frequent tech industry skeptic, put it, “this is the room you pull together when your staffers want pictures with tech industry AI celebrities. It’s not the room you’d assemble when you want to better understand what AI is, how (and for whom) it functions, and what to do about it.”

In future meetings, Congress should prioritize talking to engineers, ethicists, AI researchers, policy experts and others who are steeped in the day-to-day work of trying to build safe, ethical and trustworthy AI. Lawmakers will need to understand how different policy levers can be brought to bear on AI risks, and where quirks of the technology are likely to limit what policy can achieve. This requires input from the working level.

The conversation should also be broader. Along with more representatives from academia and other corners of civil society, Schumer should invite a wider cross-section of businesses to participate. This should include representatives of banks, pharmaceutical companies, manufacturers and other firms that will be among the biggest users of AI, not just the leading tech companies.

Second, focus on concrete problems. There are plenty of risks to worry about with AI. Policymakers need to prioritize. Concerns that powerful AI could slip out of human control or lead to widespread job losses tend to dominate popular discussions of AI on social media.

Lawmakers should not ignore these more speculative concerns, but they should focus first on risks with clearly identified mechanisms for harm, where policy has the best chance of making a difference. These include the risk that poorly designed or malfunctioning AI systems could hurt people’s economic prospects or damage critical infrastructure and the risk that bad actors could use powerful, commercially available AI tools to design new toxins or conduct damaging cyberattacks.

Third, make use of existing tools before inventing new ones. It is a myth that AI is an unregulated free-for-all in the United States. Since 2019, the government’s strategy has been to apply existing laws to AI, while asking executive branch agencies to develop new rules or guidance where needed. Regulating AI in medical devices doesn’t require Congress to act. The Food and Drug Administration is already doing it. This bottom-up approach can be frustratingly slow and uneven, but it is also smart, because how and where a technology is being used matters a great deal when talking about its risks.

Congress should first ensure that government institutions that are already grappling with AI have the expertise, legal authority and motivation they need to do their jobs. It could then focus new legislation on gaps that aren’t covered by existing laws. A national personal data protection law would be an obvious place to start.

Congress should also consider strengthening existing structures for coordinating on AI policy across the federal government. This could include boosting funding for the National Institute of Standards and Technology, which has been doing groundbreaking work on managing AI risks on a shoestring budget, or empowering the White House Office of Science and Technology Policy and the National Science and Technology Council to play a more prominent role in setting the tone and direction of U.S. policy on AI and other complex technology challenges.

Such interventions may not attract the same headlines as creating a “new agency” to oversee AI, as some lawmakers and technology leaders have proposed. But they could help improve management of AI risks, while avoiding needless duplication.

Finally, and perhaps most importantly, Congress should acknowledge that regulating AI is a problem that will probably never be fully solved. The risks of AI – and government’s ability to respond to them – will change as the underlying technology, uses of AI, business models and societal responses to AI evolve. Policies will have to be revisited and revised accordingly. Whatever Congress decides to do about AI in the coming months, it should aim for a flexible approach.

Kevin Allison is the president and head of research at Minerva Technology Policy Advisors, a consulting firm focused on the geopolitics and policy of artificial intelligence, and a senior advisor at Albright Stonebridge Group.