US and UK release guidelines for secure AI development

U.S. and British authorities released guidelines Sunday for how to securely develop and deploy AI systems, the latest in a string of initiatives by Washington and London to address the security risks posed by machine learning technologies.

Developed by a coalition of cybersecurity and intelligence agencies together with technology firms and research organizations, the voluntary guidelines recommend how organizations can design and build AI systems with security in mind.

The high-level document offers advice across four stages of the AI life cycle: design, development, deployment, and operation and maintenance. By applying established cybersecurity concepts such as threat modeling, supply chain security and incident response, it aims to encourage developers and users of AI to prioritize security.

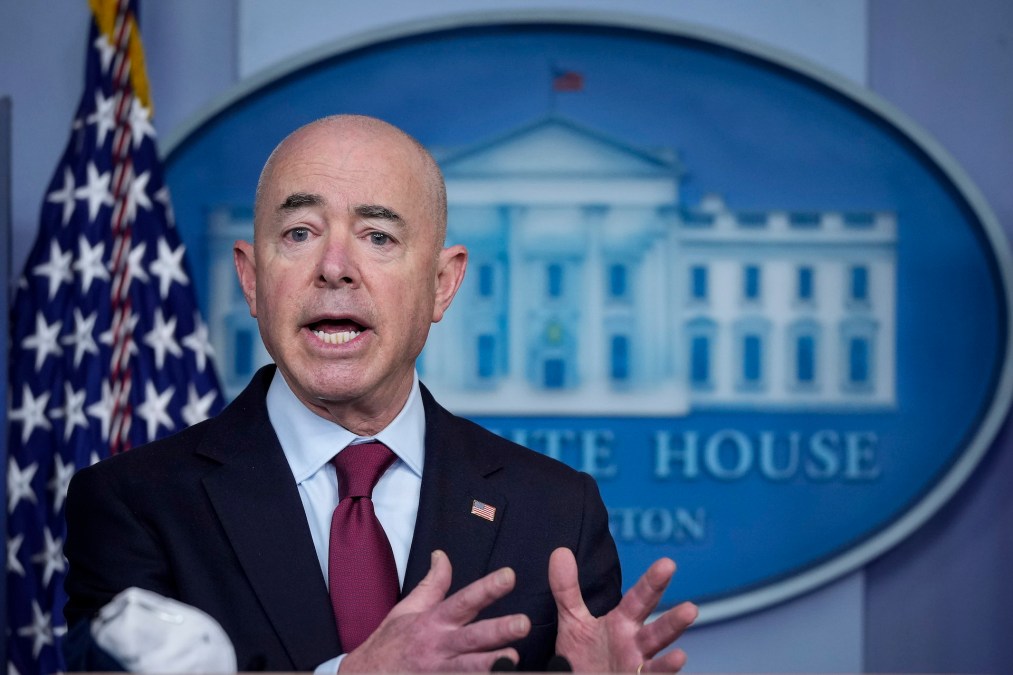

“We are at an inflection point in the development of artificial intelligence, which may well be the most consequential technology of our time. Cybersecurity is key to building AI systems that are safe, secure, and trustworthy,” Secretary of Homeland Security Alejandro Mayorkas said in a statement.

As AI systems are rapidly deployed across society, developers and policymakers are rushing to address safety and security concerns posed by the technology.

Last month, President Joe Biden signed an executive order directing the federal government to step up efforts to develop standards for addressing security concerns, particularly around red-teaming AI models and watermarking AI-generated content. Earlier this month, the Cybersecurity and Infrastructure Security Agency released a roadmap for addressing the threat posed by AI to critical infrastructure. At a conference in London, a coalition of 28 states committed to subjecting leading AI models to intensive testing before release.

But experts caution that efforts to address the risks of AI continue to lag behind efforts to develop and deploy the cutting edge of the technology. Though thin on technical detail, Sunday’s guidelines aim to provide a set of principles that both AI users and developers can use to harden their systems.

CISA Director Jen Easterly called the guidelines “a key milestone in our collective commitment — by governments across the world — to ensure the development and deployment of artificial intelligence capabilities that are secure by design.”

CISA released the document together with the United Kingdom’s National Cyber Security Centre. The guidelines were authored by 21 security agencies and ministries spanning the G7 nations. A who’s who of AI firms — ranging from OpenAI to Anthropic to Microsoft — provided input, together with research organizations like RAND and Georgetown’s Center for Security and Emerging Technology.